In a blog post shared on Thursday, Google announced the release of Gemini 1.5, its next-generation AI model that promises significant improvements in performance and efficiency. Among the enhancements is the ability to process and understand vast amounts of information, up to 1 million tokens at a time.

What is Gemini 1.5?

Building on the success of Gemini 1.0, the latest iteration uses a new Mixture-of-Experts (MoE) architecture, which divides the model into smaller, specialized "expert" networks and activates only the ones most relevant to a given input. Google says this makes the model more efficient to train and serve while maintaining high performance. Google also says Gemini 1.5 handles multimodal inputs, including text, images, audio, and video, with better accuracy and understanding.
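To make the MoE idea concrete, here is a minimal sketch of a generic top-k gated Mixture-of-Experts layer in plain Python. It is not Gemini's implementation: Google's announcement does not describe its routing details, and the names `moe_forward`, `gate_weights`, and the toy scaling "experts" are hypothetical. The point it illustrates is the one Google describes: a router scores every expert, but only the few highest-scoring experts actually run for a given input.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Sequence[float]], Vector]


def softmax(xs: Sequence[float]) -> Vector:
    """Numerically stable softmax over a short list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    gate_weights: Sequence[Sequence[float]],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Toy top-k gated MoE layer (hypothetical; not Gemini's code).

    Assumes every expert returns a vector the same length as x.
    """
    # Router: one score per expert, the dot product of x with that expert's gate row.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts; the others are never called.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize the gate scores over the chosen experts only.
    weights = softmax([logits[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        y = experts[i](x)  # sparse computation: only selected experts execute
        out = [o + w * yj for o, yj in zip(out, y)]
    return out, chosen


# Usage with four toy "experts" that just scale their input.
experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.5, 0.5]]
out, chosen = moe_forward([2.0, 1.0], gate, experts)
print(chosen)  # [0, 3]
```

With `top_k=2`, only two of the four experts do any work for this input, which is the source of the efficiency gain: total parameter count can grow while per-input compute stays roughly proportional to `top_k`.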

One of the model's most notable features is its extended context window. While Gemini 1.0 could handle up to 32,000 tokens, Gemini 1.5 can process up to 1 million. This lets it process, analyze, and reason over much larger volumes of text, code, video, and audio, even when they are all supplied in a single prompt.

The extended context window unlocks new capabilities:

- Complex Reasoning: Gemini 1.5 can analyze massive amounts of information, like the entire transcript of the Apollo 11 mission, identifying details and reasoning across the entire document.

- Multimodal Understanding: The model can reason across different media types, such as analyzing the plot of a silent movie from its visuals alone.

- Relevant Problem-Solving: When given large codebases, Gemini 1.5 can suggest modifications and explain how different parts of the code interact.

Google also reports that Gemini 1.5 Pro outperforms Gemini 1.0 Pro on 87% of the benchmarks it uses to develop its large language models and performs at a broadly similar level to Gemini 1.0 Ultra, even with its larger context window.

Access and availability

Google is offering a limited preview of Gemini 1.5 Pro to developers and enterprise customers, with a standard 128,000-token context window. Early testers can also try the 1-million-token window at no cost during the preview, though with longer latency. The company plans to introduce pricing tiers based on context window size in the future.

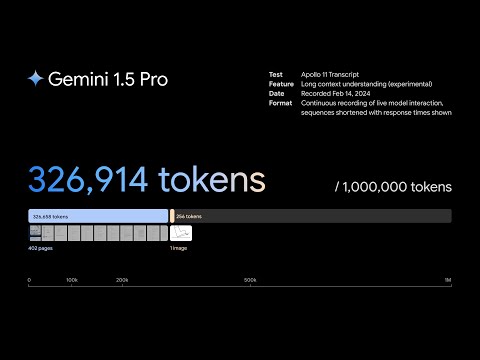

Gemini 1.5 Pro Demo by Google

Google shared a video on YouTube that showcases the model's long-context understanding through a live interaction with a 402-page PDF transcript of the Apollo 11 mission and multimodal prompts. The demonstration is a continuous recording of the model's responses, with response times shown. The input PDF (326,658 tokens) and image (256 tokens) total 326,914 tokens, and the text prompts bring the total to 327,309 tokens.
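The demo's token figures can be cross-checked with a few lines of Python. This is a simple arithmetic sketch: the 395 text-prompt tokens are derived by subtraction rather than reported by Google, and treating "1 million" as exactly 1,000,000 tokens is an assumption. It also shows why the demo needed the extended window, since a roughly 327,000-token prompt is well beyond the standard 128,000-token preview window.

```python
# Token counts reported for Google's Apollo 11 long-context demo.
PDF_TOKENS = 326_658
IMAGE_TOKENS = 256
TOTAL_WITH_TEXT = 327_309

# Context-window sizes from the preview announcement; "1 million" is
# assumed here to mean exactly 1,000,000 tokens.
PREVIEW_WINDOW = 128_000
EXTENDED_WINDOW = 1_000_000

media_tokens = PDF_TOKENS + IMAGE_TOKENS      # 326,914, matching the reported figure
text_tokens = TOTAL_WITH_TEXT - media_tokens  # 395, derived by subtraction

print(f"media: {media_tokens:,}  text prompts: {text_tokens:,}")
print("fits 128K preview window:", TOTAL_WITH_TEXT <= PREVIEW_WINDOW)  # False
print("fits 1M window:", TOTAL_WITH_TEXT <= EXTENDED_WINDOW)           # True
```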