What to know

- Midjourney has deployed the ability to create consistent characters across images and styles.

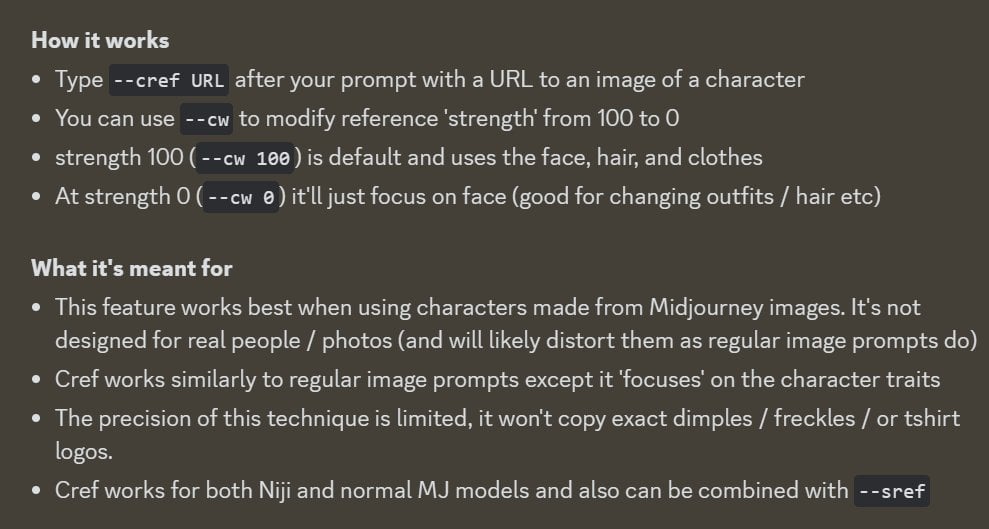

- Type --cref URL after your prompt, where URL points to an image of a character. You can further control the strength of the reference with the --cw parameter.

- Character references work best when used with characters made in Midjourney.

For all its fascinating capabilities, AI image generation is riddled with issues. One of the chief obstacles to professional use is its lack of consistency in character generation. But all that may soon be history, for Midjourney now lets you create the same character across new images and styles with a new --cref parameter (short for ‘character reference’).

Midjourney can generate consistent characters with its character reference (cref) feature

The ability to create consistent characters has been one of the most requested features, and understandably so, since it opens up new possibilities for creators of all types, including professionals, who may want to integrate AI into narratives.

Testing @midjourney's --cref. This is my first try, first batch. Looks like it's working.

It means we can tell sequential stories with consistent characters in MJ images. I don't know if it's the most technical feature, but from a storytelling pov, it's the most important ever. pic.twitter.com/7zNLFYs4iI

— Alain Astruc (@alainastruc) March 12, 2024

Midjourney’s Discord channel explains how the feature can be used. Simply put, you need only enter a prompt followed by the --cref parameter and the URL of the image whose character you want to use as a reference.

You can further control how similar or different the final result is from the reference image by setting the character weight (--cw) on a scale from 0 to 100. The higher the number, the more closely the result will follow the reference; the lower the number, the more the results will vary from it.
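To make the prompt format concrete, here is a minimal Python sketch that assembles a prompt string from the pieces described above. The function name `build_cref_prompt` and the example.com URL are hypothetical and used only for illustration; the sketch builds text only and does not call any Midjourney API.

```python
from typing import Optional


def build_cref_prompt(prompt: str, reference_url: str,
                      character_weight: Optional[int] = None) -> str:
    """Append --cref (and optionally --cw) to a prompt string.

    Hypothetical helper for illustration: it only formats text.
    """
    # The article gives 0 to 100 as the valid range for character weight.
    if character_weight is not None and not 0 <= character_weight <= 100:
        raise ValueError("character weight must be between 0 and 100")

    parts = [prompt.strip(), f"--cref {reference_url}"]
    if character_weight is not None:
        parts.append(f"--cw {character_weight}")
    return " ".join(parts)


# Placeholder URL; substitute a link to your own character image.
print(build_cref_prompt("a knight reading in a cafe",
                        "https://example.com/knight.png", 50))
```

The printed string is what you would type as the prompt. Leaving out the third argument omits --cw entirely, so the reference is used without an explicit weight.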

Already users are having a field day with the character reference and character weight commands and generating characters that are consistent across different images and styles, spurring new narratives by placing them in new environments, giving them different props, and otherwise opening up creative possibilities.

A few more consistent character tests in Midjourney

top row → test of --cw values on clothing

middle → seeing how it transfers emotions

bottom → using --cref with different actions

prompts & some notes in thread: pic.twitter.com/i6Ec1qgYis

— Nick St. Pierre (@nickfloats) March 12, 2024

But, as pointed out by creators as well as any layperson looking at the generated art, the results are not always truly ‘consistent’. The devil, as they say, is in the details. Depending on how much time one spends generating a character consistently across images, there could be big unintended changes, especially when it comes to the facial features.

But all such things are bound to get better with further iterations and, as pointed out by one Reddit user, “this is as shitty as it’s ever going to be! I’m so excited for the future!”