What to know

- Opera is the first browser to add built-in support for local LLMs, offering roughly 150 model variants.

- Local LLMs offer better privacy and security, as well as offline access to the AI models. Their speed, however, depends on your machine’s hardware.

- Access to local AI models is available in the developer stream of Opera One.

Opera is integrating support for about 150 local LLM (Large Language Model) variants into the developer stream of Opera One, the company’s AI-integrated browser. This experimental local AI support is a first for any browser and gives users easy access to AI models from within the browser itself.

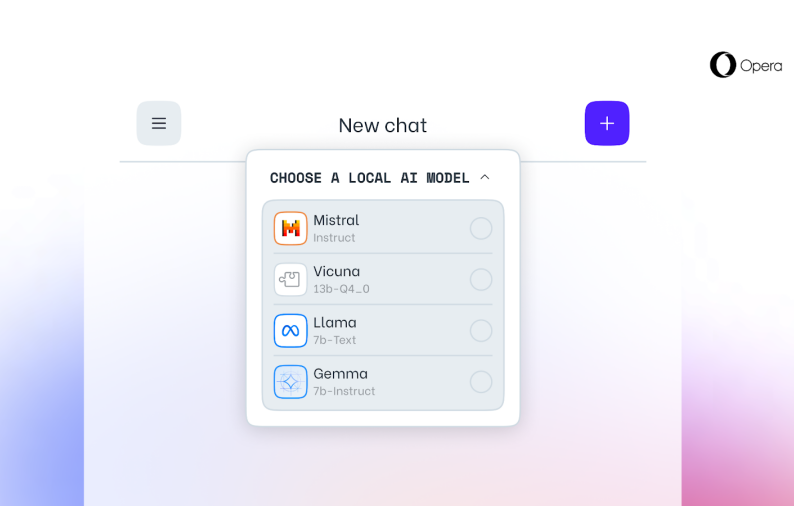

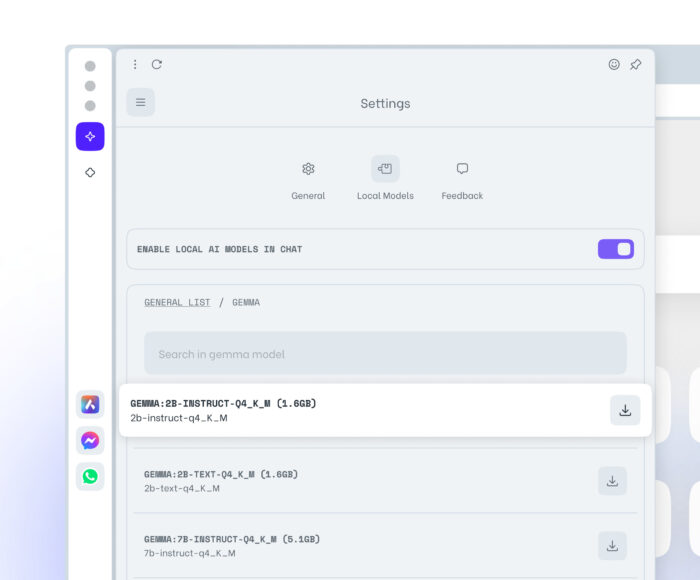

Prominent local LLMs available in Opera One include Meta’s Llama, Google’s Gemma, Vicuna, and Mistral AI’s Mixtral. These are offered in addition to Opera’s own Aria chatbot.

To try it, Opera One Developer users can update to the latest browser version, select the models they want to test, activate the new feature, and download the chosen local LLM to their machine. Each local LLM typically requires 2 to 10 GB of storage. Once a model is downloaded, you can switch from Aria to the local LLM. For details, refer to our guide on How to Enable and Use Local AI Models on Opera One Developer.

Local LLMs have several benefits: better privacy and security (since your data never leaves your machine), offline use, and an enhanced browsing experience overall. Their main drawback is that they can be slower to produce output than server-based LLMs, because processing depends entirely on your computer’s hardware. So while most modern machines should handle them without trouble, older devices may struggle with the resource load.

Nevertheless, integrating local AI models directly into the browser is a big step forward for Opera, and other major browsers may yet follow the trend.