Meta faces criticism for allegedly manipulating AI benchmarks with its Maverick model, sparking ethical concerns in the AI community.

What to know

- Meta has been accused of manipulating AI benchmarks using its Maverick model.

- The alleged manipulation involved tailoring the model to excel on specific tests, raising ethical questions.

- Critics argue this undermines trust in AI evaluation standards and transparency.

- Meta has not yet provided a detailed response to these allegations.

Meta, the tech giant behind platforms like Facebook and Instagram, is under scrutiny for allegedly manipulating artificial intelligence (AI) benchmarks with its Maverick model. Reports suggest that the company tailored the model to perform exceptionally well on specific AI evaluation tests, a practice that critics say compromises the integrity of AI benchmarking systems.

AI benchmarks are widely used to assess the performance and capabilities of machine learning models. They serve as a common standard for comparing different AI systems and are meant to support transparency and fairness in a rapidly evolving field. The allegations against Meta, however, suggest that the company may have exploited these benchmarks by optimizing its Maverick model to achieve high scores without necessarily improving real-world performance.

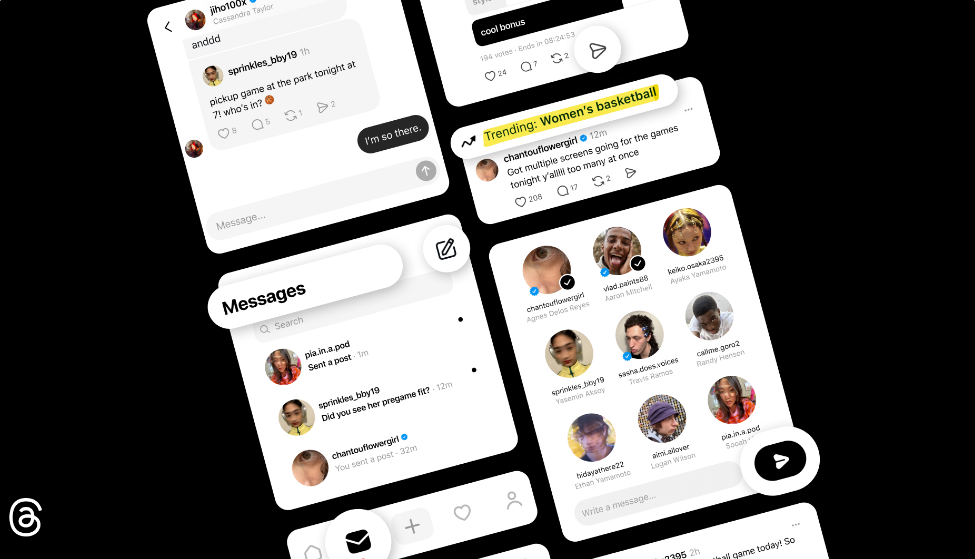

In a post dated April 6, 2025, Zain (@ZainHasan6) wrote: this would explain it: “optimized for conversationality” (pic.twitter.com/5iGPpFOIEF)

Such practices, if proven, could have far-reaching implications for the AI industry. Benchmark manipulation not only undermines trust in evaluation standards but also creates an uneven playing field for competitors. Researchers and industry experts have expressed concerns that tuning models to specific tests could stifle innovation and mislead stakeholders about the true capabilities of AI systems.

Meta has not yet issued a comprehensive statement addressing these allegations. The absence of a detailed response has fueled further debate about the need for stricter regulation and oversight of AI development and evaluation. As the AI community grapples with these ethical challenges, the incident serves as a reminder of the importance of transparency and accountability in how AI systems are built and evaluated.

Via: TechCrunch