What to know

- Results of a Reuters Institute survey suggest that ‘very few’ people use AI tools daily.

- The mismatch between the ‘hype’ and ‘public interest’ in tools like ChatGPT, Copilot, and Gemini is at odds with big tech’s no-holds-barred approach to everything AI.

- Limited AI capabilities and a pessimistic outlook for an AI-powered future appear to be the main reasons behind the lack of AI adoption among users.

Artificial Intelligence is a loaded term. To some it signifies the way of the future; to others, a disruptive technology here to rid them of their jobs. Although the technology is still in its early stages, big tech is betting big on AI-enabled tools like chatbots and virtual assistants.

However, several studies report that most people are just not that into AI tools. For all the tools’ capabilities, the hype surrounding AI may well be groundless. But what gives? Here’s a look at what recent reports have to say about people’s lukewarm reception of AI tools.

Very few use AI tools like ChatGPT and Gemini – Report

A recent study conducted by the Reuters Institute shows that very few people actually use tools like ChatGPT and Gemini. The institute surveyed 12,000 people in six different countries; here are the main takeaways.

- A good chunk of people (20-30 percent) haven’t even heard of AI tools, even though there is widespread awareness of generative AI.

- ChatGPT is the most used AI tool, two to three times more than Google’s Gemini and Microsoft’s Copilot.

- Young people are more likely to use generative AI tools. They also expect AI to have more impact on their own lives than older people do.

- Frequent use of ChatGPT is rare: 1% in Japan, 2% in France and the UK, and 7% in the USA use ChatGPT daily. Only 5% have ever used AI tools to look up news.

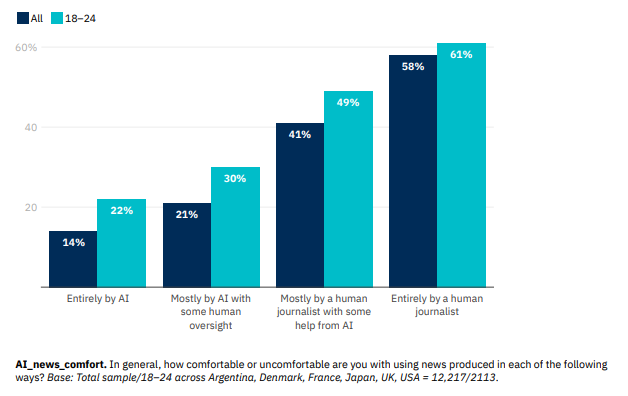

- Most people are uncomfortable with news produced by AI.

- Asked whether generative AI will make society better or worse, people are generally pessimistic.

It’s been a little less than two years since OpenAI launched ChatGPT to the public, and every AI tool that’s followed has been its derivative in one form or another. It’s no surprise, then, that ChatGPT is what people have most heard of.

When it comes to usage, however, the story is as old as time: New technology sparks curiosity, finds few users. But with all the hype surrounding AI and AI-powered tools, there’s more to the data than meets the eye.

A few hopes, many fears

Studies like the one mentioned above surface what we’ve suspected all along: that AI tools are for a small minority of tech enthusiasts and digital creators. You’d hardly ever find your grandpa petitioning to get back ChatGPT’s Sky voice.

Many decry the implementation of AI tools to automate work, and rightly so, for its effect on job security is nothing if not dire. But worse still is when AI starts doing things that require any modicum of social trust. That is why, as the survey suggests, people are pessimistic about its impact on society at large, and are wary about it being used in journalism and news media.

Proportion that say they are comfortable with news made in each way

Specifically, people are generally more concerned about AI being used in ‘hard’ news topics like international affairs and politics than ‘soft’ topics like fashion and sports.

Even if it’s a small matter of summarizing missed meetings, it only takes one little mistake for people to stop trusting AI tools. Can you trust AI to save your job?

What LLMs and machine learning tools do best may not be suited for consumer products, writing journalistic pieces, or creating artistic works. They are particularly good at sifting through large chunks of data with a user-defined framework, writing emails that no one reads, and creating images when one isn’t available.

The fact that the heaviest ChatGPT users are in the 18-24 age bracket also suggests that AI tools are mostly still tutors and homework helpers.

Why there’s a mismatch between AI ‘hype’ and ‘public interest’

To be honest, it’s sometimes fun talking to ChatGPT, getting simple advice on everyday matters, and handing off meaningless tasks for the chatbot to finish. But anything beyond that requires a level of trust and capability that AI tools simply haven’t earned yet.

How well AI tools perform also depends on how well the user prompts them. You can’t get AI to write Shakespeare on your first prompt. Even if you managed to get something approximating the Bard, it may take you several dozen prompts. So, not everything is ready to go for the first-time user. The time you spend prompting could be put to better use, like learning to write yourself, which is far more engaging and satisfying.

Generative AI also needs continual human input and creativity. If it feeds exclusively on its own content, the information in-breeding will eventually cause it to collapse or render information that is detached from human needs.

Besides, most things that AI tools can currently do – things that actually matter – a human can do just as well, often faster and better. Surely you don’t need AI to change themes or turn your Bluetooth on or off. One really has to wonder, then, why Microsoft is hell-bent on making PCs with dedicated Copilot keys.

With billions of dollars being invested, much of the hype surrounding AI has to do with what it could potentially do. The reality of what AI can do right now may not stir people’s imaginations, which is why the public hasn’t shown much interest. But for those who have an eye to the future, every little step leads closer to what we might call artificial general intelligence, or as we like to call it, ‘actually useful’ AI.

When will AI do my laundry?

What users really want to know is when AI will advance enough to do basic chores like the laundry or cleaning the house, perhaps even cooking.

That’s one for those working on Artificial General Intelligence (AGI). Surely then we’ll be able to spend more time doing what we really like: spending time with family and friends, making art, and playing all those games. Such a future may look more and more like what Marx had in mind. But we’ve been sold utopian dreams before.

You know what the biggest problem with pushing all-things-AI is? Wrong direction.

I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.

Joanna Maciejewska (@AuthorJMac), March 29, 2024

Jokes apart, what it really means is that most users will continue to overlook AI until it can do things that are actually useful. For basic chores, we will need AGI fitted into robots, which is still a distant dream. But between now and then, it’s quite likely that generative AI will find its niche. With a little more trustworthiness, several security and privacy guardrails, a bit of regulation, and less forcing AI down our throats, big tech may find the best use case for such tools eventually.

Because it’s still early days, it’s not easy gazing into the crystal ball. Like the early days of the web, there’s no foretelling how revolutionary this could be, if at all. But industry hopefuls will give it every chance of advancing to the next level in the hopes of profiting off the results whenever they happen to arrive. Until then, the AI hype continues to build. Whether or not users will buy into it, only time will tell.