On Saturday, a couple of interesting Google Pixel 4 images started circulating on Twitter, showing an oval-shaped cutout on the right of the detached bezels for the Pixel 4 and Pixel 4 XL.

A lot of theories have come to the fore since then, but the majority of them hint at Project Soli integration. We are not sure whether the Pixel 4 devices will feature Project Soli or not, but the technology itself is fascinating, to say the least.

So, without further ado, let’s get to it.

What is Project Soli?

Google has assigned some of its best engineers to create a piece of hardware which would allow the human hand to act as a universal input device. In smartphones and wearables, we currently use the touch panel to interact with the system. Soli aims to remove the middleman (touch panel) by letting you interact with your device using simple hand gestures, without making contact.

How does it work?

The Soli sensor emits electromagnetic waves in a broad beam. The objects in the beam’s path scatter this energy, feeding some of it back to the radar antenna. The radar processes some properties of the returned signal, such as energy, time delay, and frequency shift. These properties, in turn, allow Soli to identify the object’s size, shape, orientation, material, distance, and velocity.
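To make the link between the returned signal and the object's distance and velocity concrete, here is a minimal sketch using textbook radar relations. It is an illustration only, not Soli's actual processing pipeline. The function names are made up for this example, and the 60 GHz carrier frequency and the sample delay and shift values are assumptions that do not come from this article.

```python
# Illustrative only: textbook radar relations, not Soli's actual signal pipeline.
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_delay(delay_s: float) -> float:
    """Distance to the target from the round-trip time delay of the echo."""
    # The wave travels out and back, so halve the round-trip path.
    return SPEED_OF_LIGHT * delay_s / 2.0


def velocity_from_doppler(shift_hz: float, carrier_hz: float) -> float:
    """Radial velocity of the target from the Doppler frequency shift."""
    wavelength = SPEED_OF_LIGHT / carrier_hz
    # The shift is doubled by the round trip, hence the division by two.
    return shift_hz * wavelength / 2.0


if __name__ == "__main__":
    carrier = 60e9  # assumed carrier frequency in Hz (not stated in this article)
    delay = 2e-9    # hypothetical round-trip delay of 2 nanoseconds
    shift = 400.0   # hypothetical Doppler shift of 400 Hz
    print(f"range: {range_from_delay(delay):.3f} m")
    print(f"velocity: {velocity_from_doppler(shift, carrier):.3f} m/s")
```

In this sketch, a longer delay means a more distant hand, and a larger frequency shift means a faster-moving one.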

Soli doesn’t need large bandwidth or high spatial resolution to work. In fact, according to Google, its spatial resolution is coarser than the scale of most fine finger gestures. Instead, the radar tracks subtle variations in the received signal over time to decode finger movements and deforming hand shapes.
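One way to see why tracking small variations in the received signal over time can reveal tiny movements is the phase of the echo, which shifts noticeably even when a reflector moves by a fraction of a millimetre. The sketch below uses the standard round-trip phase relation. Whether Soli relies on phase in exactly this way is not stated in this article, and the function name, the 60 GHz carrier, and the 0.1 mm displacement are assumptions chosen for illustration.

```python
import math

# Illustrative only: a generic radar phase relation, not Soli's documented method.
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def phase_change_rad(displacement_m: float, carrier_hz: float) -> float:
    """Phase change of the echo when a reflector moves radially by displacement_m."""
    wavelength = SPEED_OF_LIGHT / carrier_hz
    # The round-trip path changes by twice the displacement.
    return 4.0 * math.pi * displacement_m / wavelength


if __name__ == "__main__":
    carrier = 60e9         # assumed carrier frequency in Hz
    finger_motion = 1e-4   # hypothetical 0.1 mm finger movement
    phase = phase_change_rad(finger_motion, carrier)
    print(f"phase change: {math.degrees(phase):.1f} degrees")
```

At a wavelength of roughly 5 mm, even a 0.1 mm movement produces a phase change of several degrees, which is why motion can be measured far more finely than position.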

How to use Soli?

Soli relies on what Google calls Virtual Tools: hand gestures that mimic familiar interactions with physical tools. It recognizes these gestures and carries out the tasks associated with them.

Imagine holding a key between your thumb and index finger. Now, rotate the key as if you were opening a lock. That’s it. Soli, in theory, will pick up the gesture and perform the task associated with it.

So far, Soli recognizes three primary Virtual Gestures.

Button

Imagine an invisible button between your thumb and index finger. Press the button by tapping the fingers together. Primary use is expected to be selecting an application and performing in-app actions.

Dial

Imagine a dial that you turn up or down by rubbing your thumb against the index finger. Primary use is expected to be volume control.

Slider

Finally, think about using a Virtual Slider. Brush your thumb across your index finger to move it. Primary use is expected to be controlling horizontal sliders, such as brightness control.

Feedback comes from the haptic sensation of your fingers touching one another.
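The three gestures above and their expected uses can be summed up as a simple lookup table. This is only a sketch of how an app might map recognized gestures to actions. The `Gesture` enum, the `EXPECTED_USE` table, and the `describe` function are hypothetical names invented for this example and are not part of any Soli API.

```python
from enum import Enum


class Gesture(Enum):
    """Hypothetical labels for the three gestures described in the article."""
    BUTTON = "button"
    DIAL = "dial"
    SLIDER = "slider"


# Expected primary uses as described in the article; not an official mapping.
EXPECTED_USE = {
    Gesture.BUTTON: "select an application or perform an in-app action",
    Gesture.DIAL: "volume control",
    Gesture.SLIDER: "horizontal sliders such as brightness",
}


def describe(gesture: Gesture) -> str:
    """Return a one-line summary of a gesture and its expected use."""
    return f"{gesture.value}: {EXPECTED_USE[gesture]}"


if __name__ == "__main__":
    for gesture in Gesture:
        print(describe(gesture))
```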

What are the applications?

As no mainstream device has implemented Soli so far, it’s hard to guess how it’d perform in the wild. But if all goes according to plan, the radar could become fundamental to smartphones, wearables, IoT components, and even cars in the future.

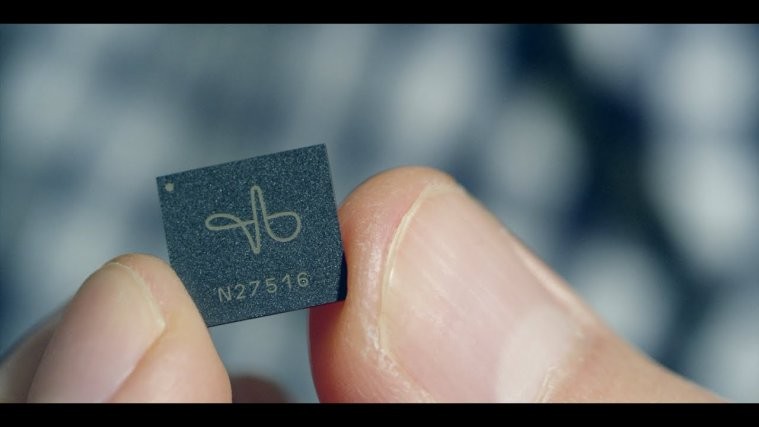

The radar is super compact (8mm x 10mm), doesn’t use much energy, has no moving parts, and packs virtually endless potential. Taking all of that into consideration, a contactless smartphone experience doesn’t seem that far-fetched.