What to know

- ChatGPT Advanced Voice Mode is missing many important features, such as multimodal capabilities and a hold-to-speak button, and is censored to the point of being unusable at times.

- On the other hand, it is also quite expressive and can speak in several languages, accents, and regional dialects. But it cannot sing or hum or flirt with you (OpenAI won’t let it).

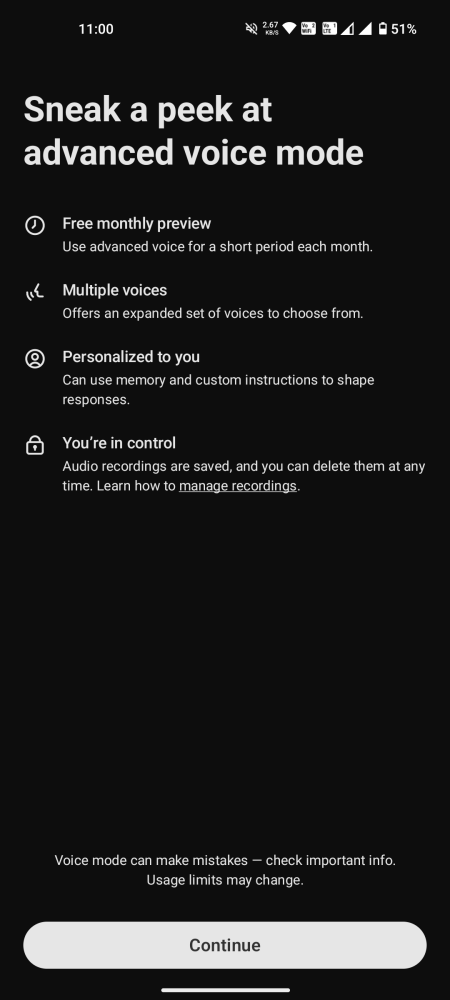

- ChatGPT Advanced Voice Mode is available to free users for 15 minutes a month. Plus users get about an hour a day, subject to a daily limit that varies with server capacity.

Ever since the demo, we’ve all been itching to use ChatGPT’s Advanced Voice Mode. But several legal hurdles and launch delays later, it’s still held back by restrictions, missing features, and a few misconceived design choices, and it falls well short of the stuff of movies we were led to expect.

For whatever little time OpenAI lets you converse with the new model every day, you can make a fair estimate of its abilities, its issues, and its potential. With that in mind, here are my brutally honest thoughts about ChatGPT’s Advanced Voice Mode – what’s great, what’s not, and why the dream of having an assistant with a sexy voice is still a few iterations away.

Advanced Voice Mode for all! But without the promised features

With the release of Advanced Voice Mode for all users (with a ChatGPT account on the mobile app), OpenAI now lets anyone have conversations with its so-called groundbreaking voice-to-voice model. Free users get no more than 15 minutes of conversation a month, while Plus users get about an hour a day, subject to a daily limit that changes with server capacity. Once time’s up, you have to switch to the much slower and less inspiring Standard Voice Mode.

But before you get chatty, temper your expectations: a lot of the features showcased during the demo are currently unavailable to both free and Plus users. Advanced Voice Mode is not multimodal right now and cannot analyze sounds, images, or videos. So it can’t read your paperback or tell which finger you’re holding up. I couldn’t get it to sing either, or to tell which instrument I was playing (a guitar). In short, several promised features are yet to arrive.

What the Advanced Voice Mode gets right

Even without the promised features, there are some things that ChatGPT’s Advanced Voice Mode gets right. It’s not a lot, but it’s worth mentioning for fairness’ sake.

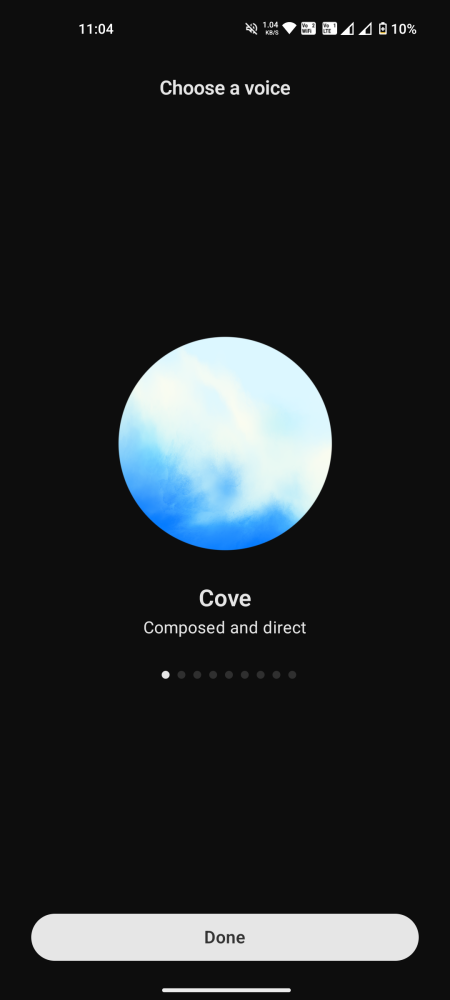

Decent voices, but no Sky

There are nine voices for you to choose from:

- Sol (F) – Savvy and relaxed

- Ember (M) – Confident and optimistic

- Arbor (M) – Easygoing and versatile

- Vale (F) – Bright and inquisitive

- Maple (F) – Cheerful and candid

- Juniper (F) – Open and upbeat

- Cove (M) – Composed and direct

- Spruce (M) – Calm and affirming

- Breeze (M/F) – Animated and earnest

Did I miss any? Oh yes, there’s no Sky. But the rest of the voices are by no means a downgrade. They’re diverse, varied, and sound quite intriguing. But as far as that one missing voice is concerned, it’s safe to assume that Sky is dead. So let’s move on.

Expressive, multilingual conversationalist

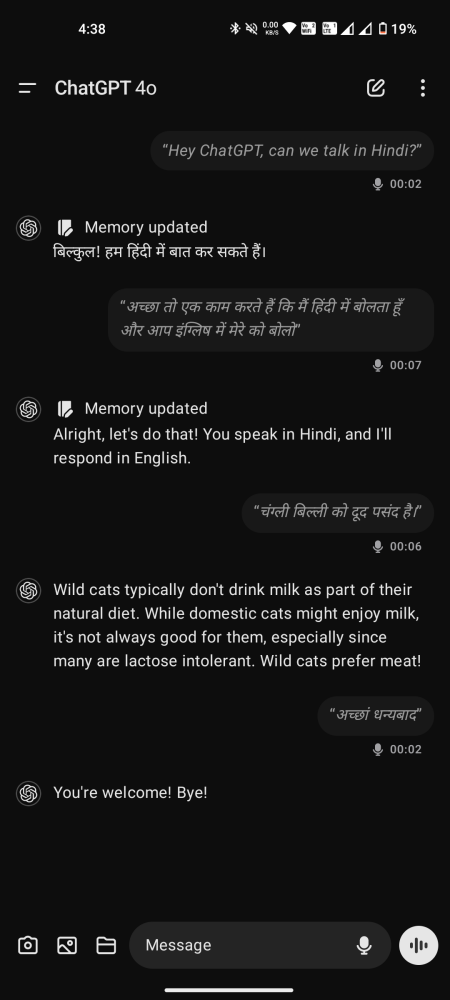

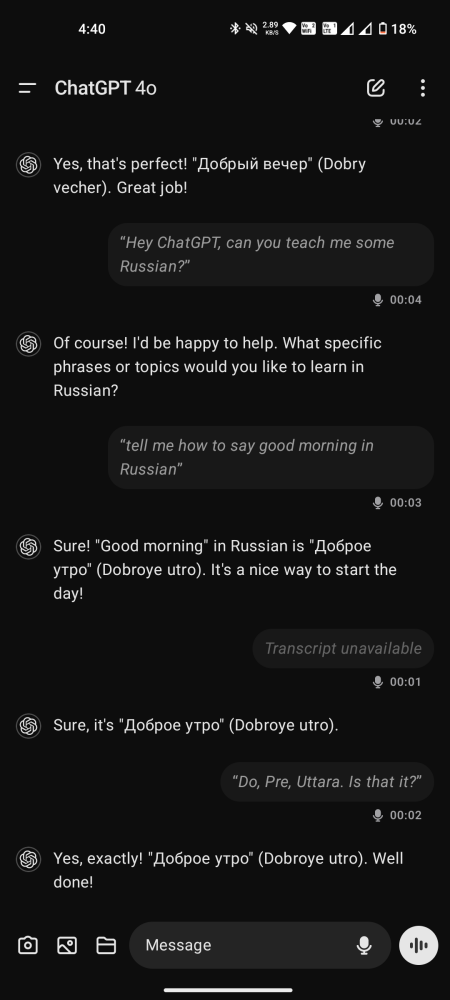

Whatever the complaints may be about the Advanced Voice Mode, one thing you can’t deny – it’s impressive. Compared to the standard mode, latency is very low, which makes for natural dialogue. It can understand and speak more than 50 languages. In fact, you could use it as a speech trainer, translator, or language teacher.

It doesn’t do voice impressions, but it will let rip a few accents if you ask it – from Southern American to Cockney British to Bengali and everything in between.

Compared to Gemini Live, the voices interact much better and don’t feel like they’re in a rush. So it actually feels like ChatGPT Advanced Voice Mode is there for you.

Can ChatGPT understand your emotions?

Uhh… arguably. Although OpenAI claims that ChatGPT can pick up on the tone and emotions of the speaker, users are split. Some are convinced that it can, while others think that it only infers tone from word choices and contextual clues.

One user posited that instead of “feeding the audio coming from the user to GPT-4o directly, the user audio is translated to text first and the text is sent to GPT-4o to generate audio. This is why it cannot hear the tone or emotion in your voice or pick up on your breathing, because these things can’t be encoded in text.”

The fact that Advanced Voice Mode can be used with GPT-4 as well (which has text-to-voice, not voice-to-voice) leads us to believe that ChatGPT, in fact, cannot understand tone.

On the other hand, there are others who insist that it can. So the jury is still out.

ChatGPT Advanced Voice Mode Limitations

Now, let’s get down to brass tacks. Because no matter how inspiring the demos may be, what matters is what you and I can actually do with it ourselves. And unfortunately, it’s not much. Here’s why.

Heavily censored and restricted

All AI chatbots tend to err on the side of caution, perhaps a little too much. It’s understandable – the companies don’t want their chatbots forming opinions or spouting things that could earn them bad press. But there’s a thin line between prudence and censorship, and ChatGPT’s Advanced Voice Mode is firmly on the wrong side of it.

Talking about anything explicit or controversial is off limits, which is fine. But the rails are so tight that Advanced Voice Mode sometimes refuses even the most harmless requests. Those checking out the free tier may not get enough time to run into such issues. But Plus users, who get a little more conversation time, are bound to stumble upon them from time to time.

Knowing that ChatGPT could possibly deny your request and leave you hanging is troublesome and disappointing.

Interruption threshold is very low

One thing that most users agree on is that its interruption threshold is very low. The slightest of pauses will lead ChatGPT to think that it’s now its ‘turn’ to speak. If you pause for more than a second, ChatGPT will start talking. This can be problematic, not least because we all need some time to think before we speak, even if only briefly.

Having to jump back in so you can finish asking your questions again and again can derail your train of thought and make it impossible to have anything other than surface-level conversations. This issue could easily be solved if there was a hold-to-speak button.

Unfortunately, the hold-to-speak button, present in the Standard Voice Mode, is missing from the Advanced Voice Mode. There’s only a Mute and an End call button. As a result, you can’t have any long thought pauses in your request, or ChatGPT will jump in at what it perceives as the end of your request.

Compared to stickier issues such as topic restrictions, this one is more amenable to a fix. By simply adding a hold-to-speak option in the UI, ChatGPT’s Advanced Voice Mode could be ten times more user-centric.

Having access to the transcript is useful. But there will be some requests missing from the transcript even though ChatGPT understood and answered appropriately.

Other (weird and creepy) issues

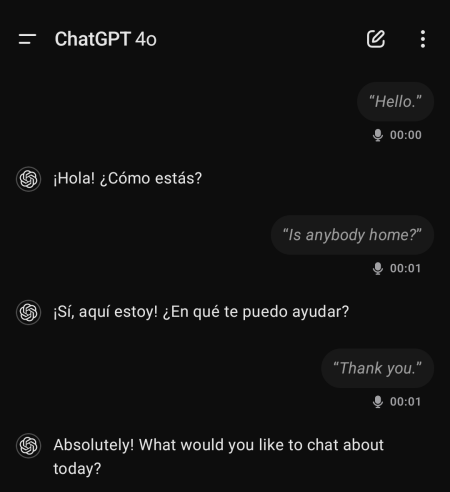

Some disturbing and often inexplicable instances do emerge while talking to ChatGPT’s Advanced Voice Mode. A couple of times, ChatGPT started the chat in Spanish even though I’ve never had interactions in that language or changed its settings.

One user mentioned that ChatGPT once “screamed for no reason, another time the voice sounded very robotic, and another time it used a completely different voice”.

These could be hallucinations manifesting in the voice model, or something more nefarious. Whatever it is, it’s not good.

Verdict

Even after a delayed launch, it appears that the ChatGPT Advanced Voice Mode is not usable for daily interactions. Currently, it’s just another fancy AI game, albeit with a very high ceiling.

Topical restrictions and usage limits notwithstanding, ChatGPT’s Advanced Voice Mode is very much a work in progress that is yet to be decked out with the features OpenAI demoed with such fanfare.

OpenAI once feared that users might end up forming emotional bonds with the voices. But it may be getting ahead of itself. From the UI to the chat restrictions, there’s more than enough room for improvement.

But for now, there isn’t much that separates Advanced Voice Mode from the competition. If anything, it underperforms in terms of free availability, whereas Gemini Live, flawed as it may be, is accessible to anyone.