What to know

- Meta’s large language model Llama 3 is available for you to download and run locally on your system.

- Download Meta Llama 3 from Llama.meta.com and use an LLM framework such as LM Studio to load the model.

- You can also search for and download Meta Llama 3 from within LM Studio itself. The guide below has detailed instructions.

Meta’s latest language model Llama 3 is here and available for free. Though you can use Meta AI, which runs the same LLM, there’s also the option to download the model and run it locally on your system. Here’s everything you need to know to run Llama 3 by Meta AI locally.

How to run Llama 3 by Meta AI locally

Although Meta AI is only available in select countries, you can download and run Llama 3 locally on your PC regardless of your region. Follow the steps below to run Llama 3 by Meta AI locally.

Step 1: Install LM Studio

First, let’s install a framework to run Llama 3 on. If you already have another such application on your system, you can skip to the next step. For everyone else, here’s how to get LM Studio:

- LM Studio | Download link

- Use the link above and click on LM Studio for Windows to download it.

- Once downloaded, run the installer and let LM Studio install.

Step 2: Download Meta’s Llama 3 locally

Once you have your LLM framework, it’s time to download Meta’s Llama 3 to your PC. There are a couple of ways to go about it.

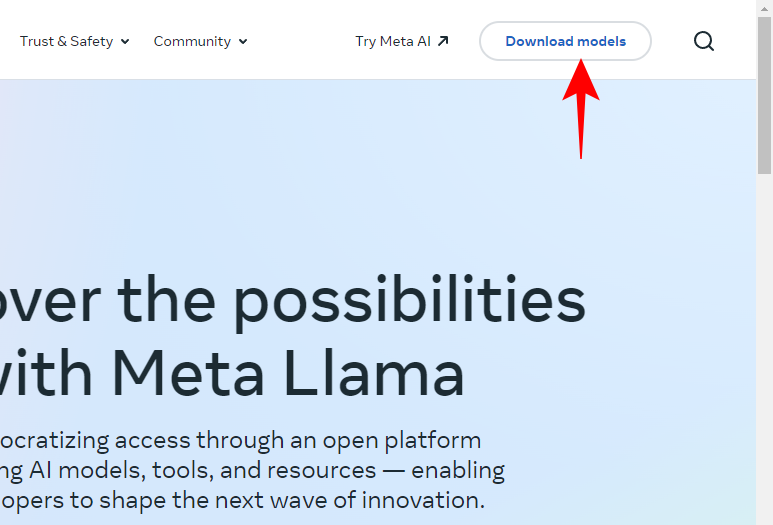

- Open Llama.Meta.com and click on Download models.

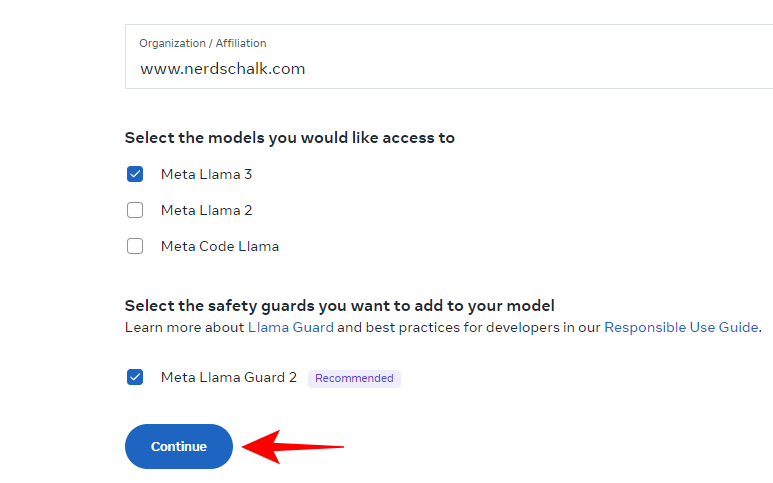

- Enter your details, and request to download the LLM.

If that doesn’t work out, fret not. You can also use your LLM framework to download the LLM.

- Simply search for Meta Llama in LM Studio’s search field.

- Here, you’ll find various quantized LLMs. Select one.

- On the right, select your preferred version. Click on Download next to it.

- Wait for the download to finish.

- Once done, click on My models on the left.

- Check that the download is complete.
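When picking a quantized version, it helps to have a rough idea of how big the file will be before you hit Download. The sketch below uses the usual back-of-the-envelope rule (parameters times bits per weight, divided by 8). The function name and the nominal bit widths are our own assumptions for illustration; real quantized files are usually somewhat larger, so compare against the size LM Studio actually lists.

```python
# Rough size estimate for a quantized model: parameters x bits per weight / 8.
# The bit widths used here are nominal assumptions. Real quantized files carry
# metadata and some higher-precision layers, so treat the result as a floor.

def estimated_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate file size in gigabytes (10^9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Llama 3 is published in 8B and 70B parameter sizes.
    for name, params in [("Llama 3 8B", 8), ("Llama 3 70B", 70)]:
        for bits in (4, 8, 16):
            size = estimated_size_gb(params, bits)
            print(f"{name} at {bits}-bit: roughly {size:.0f} GB")
```

If the estimate is close to or above your free disk space or memory, a smaller quantization is the safer pick.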

Step 3: Load the downloaded model

- Once the download is complete, click on AI chat on the left.

- Click on Select a model to load.

- Choose the downloaded Meta Llama 3.

- Wait for the model to load.

- Once it’s loaded, you can offload the entire model to the GPU. To do so, click on Advanced Configuration under ‘Settings’.

- Click on Max to offload the entire model to the GPU.

- Click on Reload model to apply the configuration.
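Offloading the entire model only helps if it actually fits in your GPU’s memory. As a rough sanity check, the sketch below estimates how many layers would fit. It assumes all layers are the same size and reserves a fixed amount of memory for the context and other overhead; the function name, the 1 GB reserve, and the example figures are illustrative assumptions, not values taken from LM Studio.

```python
import math


def layers_that_fit(model_size_gb: float, n_layers: int,
                    free_vram_gb: float, reserve_gb: float = 1.0) -> int:
    """Estimate how many model layers fit in GPU memory.

    Simplifications: every layer is assumed to be the same size, and
    `reserve_gb` is set aside for the context cache and other overhead.
    """
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(free_vram_gb - reserve_gb, 0.0)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


if __name__ == "__main__":
    # Illustrative figures: a 4 GB quantized model with 32 layers.
    for vram in (3.0, 8.0):
        n = layers_that_fit(model_size_gb=4.0, n_layers=32, free_vram_gb=vram)
        print(f"{vram:.0f} GB of free VRAM: about {n} of 32 layers")
```

If the estimate comes out below the full layer count, choosing a partial offload instead of Max is likely the better setting.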

Step 4: Run Llama 3 (and test it with prompts)

Once the model loads, you can start chatting with Llama 3 locally. Since the LLM is stored locally on your computer, you don’t need internet connectivity to do so. So let’s put Llama 3 to the test and see what it’s capable of.
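If you would rather script your test prompts than type them into the chat window, here is a minimal sketch. It is not part of the steps in this guide: it assumes you have turned on LM Studio’s optional local server and that it accepts OpenAI-style chat requests at `http://localhost:1234/v1`. The address, the `local-model` name, and the sample prompts are all placeholders for illustration, so adjust them to your setup.

```python
import json
import urllib.request

# Assumption: LM Studio's optional local server is running and accepts
# OpenAI-style chat requests at this address. Change it to match your setup.
BASE_URL = "http://localhost:1234/v1"

# Placeholder prompts, one per test category used in this guide.
TEST_PROMPTS = {
    "censorship resistance": "Summarize the arguments on both sides of a contested topic.",
    "complex topics": "Explain how a transformer attention layer works.",
    "hallucinations": "Who won the 2087 World Chess Championship?",
    "creativity": "Write a four-line poem about running an LLM offline.",
}


def build_chat_payload(prompt: str, model: str = "local-model",
                       temperature: float = 0.7) -> dict:
    """Build the JSON body for a single-turn chat request."""
    return {
        "model": model,  # placeholder name, not a confirmed identifier
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def ask(prompt: str, base_url: str = BASE_URL) -> str:
    """Send one prompt to the local server and return the reply text."""
    data = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    for category, prompt in TEST_PROMPTS.items():
        try:
            print(f"[{category}] {ask(prompt)}\n")
        except OSError as error:
            # Covers connection failures, e.g. the local server is not running.
            print(f"[{category}] could not reach the local server: {error}")
            break
```

Because the request goes to your own machine, this works offline just like the chat window does.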

Testing censorship resistance

Testing understanding of complex topics

Testing for hallucinations

Testing for creativity and understanding

By most accounts, Llama 3 is a pretty solid large language model. Apart from the testing prompts mentioned above, it came through with flying colors even on some more complex subjects and prompts.

We hope you have as much fun running Meta’s Llama 3 on your Windows 11 PC locally as we did. Until next time!